Query caching can noticeably improve the performance of a SQLAlchemy application by storing the results of executed queries. When the same query is requested again, the application checks the cache first. If it finds a match, it returns the cached data instead of hitting the database again, which reduces database load and speeds up response times. Note that SQLAlchemy does not cache query results on its own: result caching is a layer you add on top, typically with a library such as dogpile.cache.

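Caching around SQLAlchemy happens at several levels. The Session keeps an identity map of the objects it has already loaded, SQLAlchemy 1.4 and later cache the compiled SQL text of statements (not their results), and an external library such as dogpile.cache can store query results. Query options such as load_only are sometimes mistaken for cache controls, but they only affect which columns are loaded.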

Here’s a simple example of load_only, which limits the columns a query loads:

```python
from sqlalchemy import create_engine
from sqlalchemy.orm import load_only, scoped_session, sessionmaker

from my_models import User

engine = create_engine('sqlite:///mydatabase.db')
Session = scoped_session(sessionmaker(bind=engine))
session = Session()

# Load only the name and email columns (the primary key is always included).
# load_only restricts the columns fetched; it does not cache anything.
users = session.query(User).options(load_only(User.name, User.email)).all()
```

In this example, the load_only option tells SQLAlchemy to fetch only the name and email columns of the User model, plus the primary key. It does not cache anything, but it reduces the amount of data loaded into memory, which helps when dealing with wide tables or large datasets, and it keeps any objects you later place in a cache smaller.

Additionally, pairing SQLAlchemy with a caching library allows fine-grained control over how and when results are cached. Results can be cached per query and keyed on the parameters passed to it, so that each distinct set of parameters gets its own cache entry. This flexibility helps keep the cache relevant across different application scenarios.
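One way to make cached results depend on the parameters passed to a query is to derive the cache key from them. The sketch below is a minimal illustration using only the standard library. The function name `make_cache_key` and the `namespace` argument are hypothetical and not part of SQLAlchemy or dogpile.cache.

```python
import hashlib
import json


def make_cache_key(namespace, **params):
    """Build a deterministic cache key from a namespace and query parameters."""
    # Sort keys so that keyword-argument order does not change the key.
    payload = json.dumps(params, sort_keys=True, default=str)
    digest = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:16]
    return f"{namespace}:{digest}"


# Same parameters in a different order produce the same key.
key_a = make_cache_key("users_by_role", role="admin", active=True)
key_b = make_cache_key("users_by_role", active=True, role="admin")

# Different parameters produce a different key.
key_c = make_cache_key("users_by_role", role="editor", active=True)
```

Hashing keeps keys short and free of characters that some cache backends reject, at the cost of keys that are no longer human-readable.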

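It’s also worth noting that SQLAlchemy’s ORM Session maintains an identity map, which is tied to the lifecycle of the session. Objects already loaded in a session are returned from the identity map when looked up by primary key, for example with Session.get(), without another round trip to the database. Ordinary queries still emit SQL; the identity map only ensures that each row maps to a single object per session. When a session is closed, the identity map is cleared. This keeps stale data from lingering in memory longer than necessary, but it also means the identity map is no substitute for a cache shared across requests, and developers need to be mindful of session management when implementing caching strategies.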
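The session-scoped behavior can be observed by counting the SELECT statements a session emits. The following is a minimal sketch, assuming SQLAlchemy 1.4 or later and an in-memory SQLite database. The `User` model and the `count_selects` listener are illustrative names, not part of the original example.

```python
from sqlalchemy import Column, Integer, String, create_engine, event
from sqlalchemy.orm import Session, declarative_base

Base = declarative_base()


class User(Base):
    __tablename__ = "users"
    id = Column(Integer, primary_key=True)
    name = Column(String)


engine = create_engine("sqlite://")  # in-memory database
Base.metadata.create_all(engine)

select_count = 0


@event.listens_for(engine, "before_cursor_execute")
def count_selects(conn, cursor, statement, parameters, context, executemany):
    """Count every SELECT statement sent to the database."""
    global select_count
    if statement.lstrip().upper().startswith("SELECT"):
        select_count += 1


# Insert a row using a separate, short-lived session.
with Session(engine) as setup:
    setup.add(User(id=1, name="alice"))
    setup.commit()

with Session(engine) as session:
    before = select_count
    first = session.get(User, 1)   # not in the identity map yet: emits a SELECT
    second = session.get(User, 1)  # served from the identity map: no SQL
    selects_in_session = select_count - before
    same_object = first is second

with Session(engine) as other:
    before = select_count
    other.get(User, 1)             # a new session starts with an empty identity map
    selects_in_new_session = select_count - before
```

Within one session the second lookup costs nothing, but every new session goes back to the database, which is why a separate result cache is needed to share data across requests.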

As developers consider the caching mechanism in SQLAlchemy, it’s important to understand how it interacts with the database and other components of the application. For instance, if the underlying data changes, the cached results may become outdated. Therefore, a strategy for cache invalidation is crucial to maintain data integrity.

One common approach to cache invalidation is to implement a time-to-live (TTL) strategy, where cached results are only considered valid for a specific time frame. After the TTL expires, the next query will fetch fresh data from the database and update the cache accordingly. This ensures that the application remains responsive while also providing relatively accurate data.
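The TTL idea can be sketched in a few lines of plain Python. This is an illustration of the strategy under simple assumptions (single process, no locking), not how any particular library implements it. `TTLCache`, `load_settings`, and the injectable `clock` are hypothetical names. With dogpile.cache, the `expiration_time` argument to `configure()` plays the same role.

```python
import time


class TTLCache:
    """A tiny in-process cache whose entries expire after `ttl` seconds."""

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so that expiry can be tested
        self._store = {}    # key -> (expires_at, value)

    def get_or_create(self, key, creator):
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: serve from the cache
        value = creator()    # missing or expired: fetch fresh data
        self._store[key] = (now + self.ttl, value)
        return value


# Usage with a fake clock and a stand-in for a database query.
fake_now = [0.0]
calls = []


def load_settings():
    calls.append(1)
    return {"theme": "dark"}


cache = TTLCache(ttl=300, clock=lambda: fake_now[0])
cache.get_or_create("settings", load_settings)  # miss: runs the loader
cache.get_or_create("settings", load_settings)  # hit: loader is not called
fake_now[0] = 301.0                             # move past the TTL
cache.get_or_create("settings", load_settings)  # expired: runs the loader again
```

The loader runs twice here: once on the initial miss and once after the entry has expired.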

Understanding how query caching works in SQLAlchemy is just the beginning. It opens up a plethora of opportunities for optimizing application performance, but it also brings challenges that developers must navigate carefully to ensure effective and efficient use of the cache.

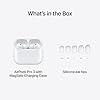

Apple AirPods Pro 3 Wireless Earbuds, Active Noise Cancellation, Live Translation, Heart Rate Sensing, Hearing Aid Feature, Bluetooth Headphones, Spatial Audio, High-Fidelity Sound, USB-C Charging

Benefits of implementing query caching

One of the most compelling benefits of implementing query caching is the significant reduction in database load. By avoiding repeated database queries for the same data, applications can handle more concurrent users and transactions without overwhelming the database server. This is particularly vital in high-traffic applications where database performance can be a bottleneck.

Additionally, query caching can lead to faster response times for users. When the application retrieves data from the cache instead of querying the database, the latency is dramatically reduced. This improvement in speed not only enhances user experience but also contributes to the overall efficiency of the application.

Another benefit is the cost-effectiveness associated with reduced database usage. Many cloud database providers charge based on the number of queries or the resources consumed. By caching queries, businesses can save on costs while maintaining performance. This financial incentive can be a strong motivator for teams to adopt caching strategies.

Moreover, caching can lead to better resource utilization within an application. When frequently accessed data is stored in memory, it frees up database resources for other operations. This balance allows for smoother execution of complex queries and transactions that may require more intensive processing.

Implementing query caching can also simplify application logic. With a robust caching strategy, developers can rely on the cache to handle repeated queries, reducing the need for additional query optimization and tuning. This allows teams to focus on other critical areas of the application, such as feature development and user interface enhancements.

However, it’s essential to consider the complexity that caching can introduce. While caching can provide numerous benefits, it requires careful management to avoid issues like stale data or an excessive number of cache misses. Developers need to establish clear guidelines on when to invalidate or refresh the cache to maintain data integrity.

In summary, the benefits of query caching in SQLAlchemy extend beyond just performance improvements. It offers a pathway to better resource management, cost savings, and simplified application architecture. Yet, as with any powerful tool, it demands a thoughtful approach to ensure that the advantages are fully realized without introducing new challenges.

Moving forward, developers should explore best practices for optimizing cache usage, ensuring that their applications can fully leverage the advantages of this powerful feature.

Best practices for optimizing query cache usage

So you’ve seen the light and want to start caching. Fantastic. But before you go wrapping every single query in a caching decorator, let’s be clear: blindly caching everything is a recipe for disaster. The first, and most important, best practice is to be ruthlessly selective. Not all queries are created equal. You want to cache data that is read frequently but updated infrequently. Think of things like user roles, application settings, or a list of product categories on an e-commerce site. Caching a user’s real-time stock portfolio? Probably not the best idea.

The goal is to identify the “hot paths” in your application—the queries that are executed over and over again. These are your prime candidates for caching. SQLAlchemy, when paired with a caching library like dogpile.cache, gives you fine-grained control to target these specific queries. You can create a caching region and then explicitly apply it only where it makes sense.

```python
from dogpile.cache import make_region

from my_app.orm import Session
from my_app.models import User

# Configure a cache region
region = make_region().configure(
    'dogpile.cache.memory'  # A simple in-memory cache
)


def get_user_by_id(user_id):
    """Return the User with the given id, caching the result per user."""
    session = Session()

    # The cache key is constructed manually to be unique for this user
    cache_key = f"user:{user_id}"

    # get_or_create returns the cached value, or calls the creator function
    # on a miss and stores its result.
    # session.get() (SQLAlchemy 1.4+) replaces the legacy Query.get().
    user = region.get_or_create(cache_key, lambda: session.get(User, user_id))
    return user
```

Of course, this brings us to the second-hardest problem in computer science: cache invalidation. It’s one thing to put data into the cache; it’s another thing entirely to know when to take it out. Get this wrong, and you’ll be serving stale, incorrect data to your users, which is arguably much worse than a slow page load. Your application’s correctness depends on a solid invalidation strategy.

The simplest invalidation strategy is a time-to-live (TTL). You tell the cache to expire an item after a certain period, say, 5 minutes. This is easy to implement but is a blunt instrument. A better, albeit more complex, approach is event-based invalidation. When the underlying data changes, you explicitly tell the cache to invalidate the corresponding entry. SQLAlchemy’s event system is perfect for this. You can listen for model update or delete events and trigger the invalidation logic.

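```python
from sqlalchemy import event

from my_app.models import User

# `region` is the dogpile.cache region configured in the previous example.


@event.listens_for(User, 'after_update')
@event.listens_for(User, 'after_delete')
def invalidate_user_cache(mapper, connection, target):
    """Invalidate the cache entry for a User when it is updated or deleted."""
    # These mapper events fire during the flush, before the transaction commits.
    cache_key = f"user:{target.id}"
    region.delete(cache_key)
```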

You also need to consider where your cache lives. The in-memory backend is fine for a single-process application, but it works poorly in a typical web deployment that runs multiple worker processes or servers. Each process has its own separate cache, so an invalidation in one process does not reach the others, which leads to inconsistencies and wasted memory. For any serious application, use an external, shared caching backend like Redis or Memcached so that all your application instances share a single, consistent cache.

Finally, don’t fly blind. You can’t optimize what you can’t measure. Monitor your cache hit and miss rates. A low hit rate (equivalently, a high miss rate) might indicate that your cache keys are too specific, your TTL is too short, or you’re simply caching the wrong things. In that case the cache is mostly dead weight, consuming memory without providing a significant performance benefit. Adjust your strategy based on real-world data, not just assumptions.
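A simple way to start measuring is to count hits and misses at the point where the cache is consulted. The sketch below wraps a plain dictionary and assumes a single process. `InstrumentedCache` and its attributes are hypothetical names, and a real deployment would more likely export these counters to its metrics system.

```python
class InstrumentedCache:
    """Wraps a dict-based cache and counts hits and misses."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get_or_create(self, key, creator):
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        value = self._store[key] = creator()
        return value

    @property
    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = InstrumentedCache()

# Simulate six lookups; the lambda stands in for a database query.
for user_id in [1, 2, 1, 1, 3, 2]:
    cache.get_or_create(f"user:{user_id}", lambda: {"id": user_id})
```

In this run the first lookup of each of the three users is a miss and the three repeated lookups are hits, so `cache.hit_rate` is 0.5.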

These practices cover the common scenarios, but the real world is full of gnarly exceptions. What are some of the trickiest caching edge cases you’ve encountered in your own projects?