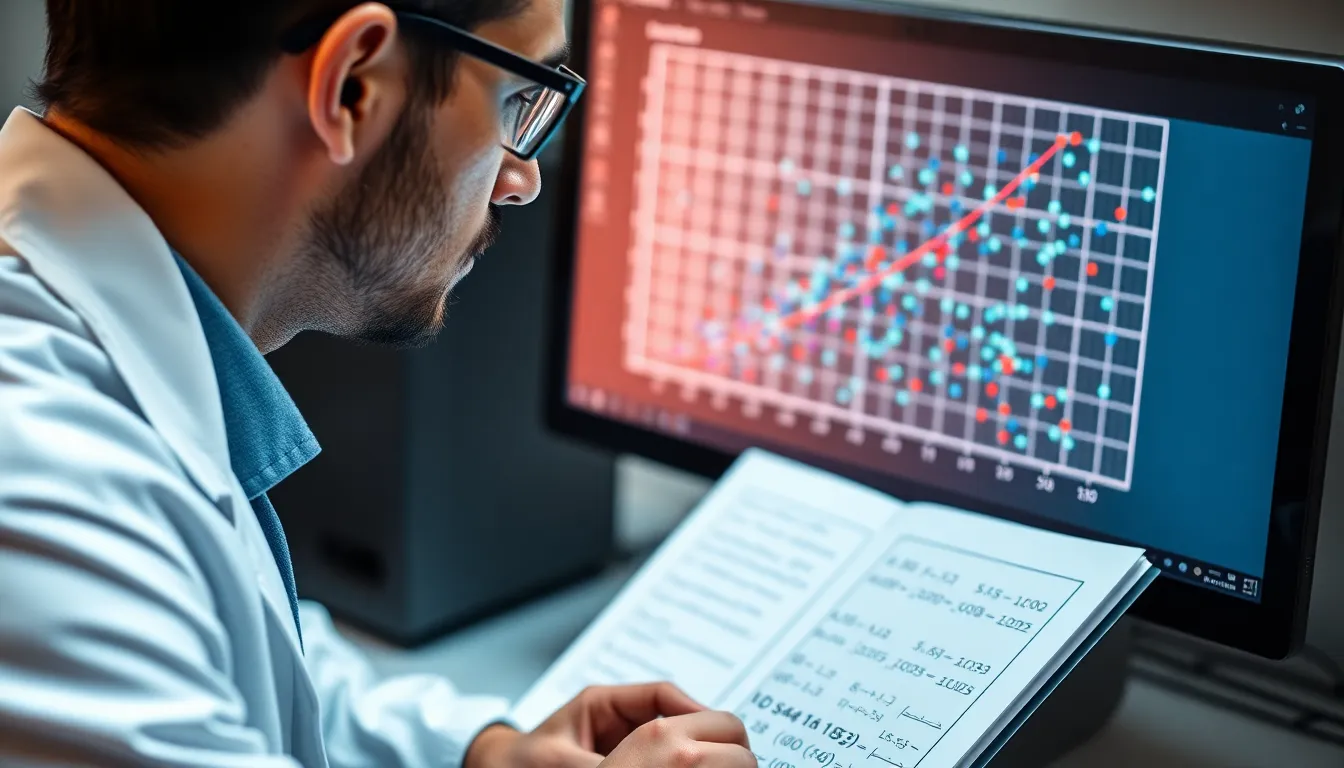

Outlier detection is a critical component in data preprocessing, especially when you need to ensure the integrity of your datasets. Outliers can skew the results of your analysis and lead to incorrect conclusions. That is particularly true in machine learning, where the presence of outliers can adversely affect model performance.

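One of the simplest methods for outlier detection is the Z-score method. The Z-score of a data point is its distance from the mean, measured in standard deviations. A common rule flags any point whose absolute Z-score exceeds 3. Keep in mind that the mean and standard deviation are themselves pulled by extreme values, and that in a very small sample no point can reach that cutoff: with the population standard deviation, the absolute Z-score is at most the square root of n - 1, so at least 11 points are needed before any can exceed 3. Here’s how you can implement this in Python: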

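```python
import numpy as np

data = np.array([10, 12, 12, 13, 12, 100, 12, 12, 12])

mean = np.mean(data)
std_dev = np.std(data)  # population standard deviation (ddof=0)

# With only 9 points, |z| can never exceed sqrt(9 - 1), about 2.83, so the
# usual cutoff of 3 would flag nothing here; a lower threshold is used instead.
threshold = 2.5

z_scores = (data - mean) / std_dev
outliers = data[np.abs(z_scores) > threshold]

print("Detected outliers:", outliers)
```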

Another effective technique is the IQR (Interquartile Range) method. Because it relies on quartiles rather than the mean and standard deviation, it does not assume a normal distribution and is much less sensitive to the extreme values themselves. The IQR is calculated as the difference between the 75th percentile (Q3) and the 25th percentile (Q1). Any point that lies outside the range from Q1 - 1.5 * IQR to Q3 + 1.5 * IQR is considered an outlier. Here’s a quick example:

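```python
import numpy as np

def detect_outliers_iqr(data):
    """Return the values lying outside the 1.5 * IQR fences."""
    Q1 = np.percentile(data, 25)
    Q3 = np.percentile(data, 75)
    IQR = Q3 - Q1
    lower_bound = Q1 - 1.5 * IQR
    upper_bound = Q3 + 1.5 * IQR
    return [x for x in data if x < lower_bound or x > upper_bound]

# Caveat: in this sample Q1 and Q3 are both 12, so the IQR is 0 and every
# value other than 12 falls outside the fences (10 and 13 as well as 100).
data = [10, 12, 12, 13, 12, 100, 12, 12, 12]
outliers = detect_outliers_iqr(data)
print("Detected outliers using IQR:", outliers)
```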

Understanding the context of your data is especially important. For instance, in a dataset related to user engagement metrics, a single user who dramatically spikes the average engagement time could be an outlier. However, if this user is a celebrity or an influencer, their behavior might be relevant rather than anomalous. Thus, the decision to remove or retain such outliers should be made carefully, drawing on domain knowledge.

Once you’ve identified outliers, your next step is deciding what to do with them. You may choose to remove them, cap them, or even transform them based on the specific needs of your analysis. Each method has its own implications, and it’s important to analyze the impact of these decisions on the overall outcome of your model.
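
The three options can be sketched on the same toy sample used earlier. This is a minimal illustration, not a recommendation: the 5th and 95th percentile bounds are an arbitrary assumption, and the log transform assumes non-negative values.

```python
import numpy as np

data = np.array([10, 12, 12, 13, 12, 100, 12, 12, 12], dtype=float)

# Illustrative bounds: the 5th and 95th percentiles (an assumption, not a rule).
lower, upper = np.percentile(data, [5, 95])

# Option 1: remove points outside the bounds (shrinks the sample).
removed = data[(data >= lower) & (data <= upper)]

# Option 2: cap (winsorize) points at the bounds, keeping the sample size.
capped = np.clip(data, lower, upper)

# Option 3: transform to compress large values; log1p assumes data >= 0.
transformed = np.log1p(data)

print("Removed:", removed)
print("Capped:", capped)
print("Log-transformed:", np.round(transformed, 2))
```

Removal changes the sample size, capping keeps every row but alters its value, and a transform keeps the ordering while shrinking the gap, so it is worth re-running your analysis under each option before committing to one.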

There’s also the option of using more advanced techniques like Isolation Forest or DBSCAN, especially when dealing with higher-dimensional data. Rather than relying on a single summary statistic, Isolation Forest scores points by how easily random splits isolate them, and DBSCAN treats points in low-density regions as noise. Here’s a quick way to implement Isolation Forest using scikit-learn:

```python
from sklearn.ensemble import IsolationForest

data = [[10], [12], [12], [13], [12], [100], [12], [12], [12]]

# contamination is the expected share of outliers; 0.2 flags roughly 2 of the 9 points
model = IsolationForest(contamination=0.2, random_state=42)
model.fit(data)

outliers = model.predict(data)
print("Outliers detected (1 for inliers, -1 for outliers):", outliers)
```
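
DBSCAN can be used in a similar spirit. The sketch below relies on the fact that scikit-learn's DBSCAN assigns the label -1 to noise points; the `eps` and `min_samples` values are illustrative guesses for this toy data and would need tuning on anything real.

```python
import numpy as np
from sklearn.cluster import DBSCAN

data = np.array([[10], [12], [12], [13], [12], [100], [12], [12], [12]])

# eps and min_samples are illustrative guesses for this toy data.
model = DBSCAN(eps=3, min_samples=3)
labels = model.fit_predict(data)

# DBSCAN labels noise points as -1, which can be read as outliers.
outliers = data[labels == -1].ravel()

print("Cluster labels:", labels)
print("Points labeled as noise:", outliers)
```

Unlike Isolation Forest, DBSCAN has no `predict` method for new samples, so this approach only applies to the dataset it was fitted on.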

As you can see, outlier detection is not just about identifying the odd data points; it’s about understanding the nature of the data you’re working with. The right approach can significantly enhance the quality of your analysis and the trustworthiness of your models. Remember, data integrity is paramount, and taking the time to address outliers can save you a lot of trouble down the line.

Understanding novelty detection for unseen data

Novelty detection, while closely related to outlier detection, serves a distinct purpose: it focuses on identifying data points that were not present during the training phase of your model. In practice, this means the model is trained solely on “normal” data, and when it encounters new, unseen data, it flags instances that deviate significantly from what it knows.

This distinction is very important for applications like fraud detection, network security, or fault detection in manufacturing, where new types of anomalies might arise that you haven’t explicitly labeled or seen before. Whereas outlier detection typically analyzes a fixed dataset that already contains the anomalies, novelty detection fits a model once on clean data and then scores new observations as they arrive, which makes it a natural fit for real-time systems and streaming data.

In scikit-learn, the OneClassSVM and IsolationForest algorithms are commonly employed for novelty detection. The key difference is how you use these models: you train them on clean data with only the “normal” class, then use them to predict whether new samples are normal or novel.

Here’s a compact example demonstrating OneClassSVM for novelty detection:

```python
from sklearn.svm import OneClassSVM
import numpy as np

rng = np.random.default_rng(42)  # fixed seed so the run is reproducible

# Training data containing only 'normal' instances (two clusters)
X_train = 0.3 * rng.standard_normal((100, 2))
X_train = np.r_[X_train + 2, X_train - 2]

# New data containing both normal and novel samples
X_new = 0.3 * rng.standard_normal((20, 2))
X_new = np.r_[X_new + 2, X_new - 2, np.array([[5, 5], [-5, -5]])]

# nu defaults to 0.5, which would treat about half the training data as
# outliers; a smaller value suits a clean training set
model = OneClassSVM(nu=0.1, gamma='auto').fit(X_train)

# Predicting on new data (-1 for novel, 1 for normal)
predictions = model.predict(X_new)
print("Predictions for new samples:", predictions)
```

Notice the “novel” points at [5, 5] and [-5, -5], which are far from the training clusters. The model should ideally label them as -1, signifying novelty. That is fundamentally different from classic supervised classification because you don’t train on labeled anomalies; the model has to infer novelty from the absence of those examples during training.

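Understanding the hyperparameters is also essential. For OneClassSVM, nu is an upper bound on the fraction of training points treated as outliers and a lower bound on the fraction of support vectors, so it roughly sets the share of the training data left outside the boundary. Its default of 0.5 is usually far too high for a clean training set. gamma is the kernel coefficient: larger values produce a tighter boundary that hugs the training points, while smaller values produce a smoother, looser one. Tweaking these values can help balance false positives and false negatives according to your application’s tolerance.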
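
To get a feel for nu, the following sketch fits OneClassSVM with three values and reports how much of the training set ends up outside the boundary. The synthetic cluster and the specific nu values are assumptions chosen for illustration.

```python
import numpy as np
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)
X_train = 0.3 * rng.standard_normal((200, 2)) + 2  # one synthetic 'normal' cluster

flagged = {}
for nu in (0.01, 0.1, 0.5):
    model = OneClassSVM(kernel="rbf", gamma="scale", nu=nu).fit(X_train)
    # Fraction of the training set that falls outside the learned boundary
    flagged[nu] = float(np.mean(model.predict(X_train) == -1))
    print(f"nu={nu}: {flagged[nu]:.1%} of training points flagged")
```

The flagged share tracks nu closely, which is why a large nu on clean training data translates directly into false positives on new normal samples.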

Alternatively, IsolationForest can be used similarly for novelty detection by setting contamination very low or to ‘auto’ and training on just the normal data. Because it works by isolating anomalies through random partitioning, it’s often more efficient for large, high-dimensional datasets:

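```python
from sklearn.ensemble import IsolationForest
import numpy as np

rng = np.random.default_rng(42)  # fixed seed so the run is reproducible

# Train on 'normal' data only
X_train = 0.3 * rng.standard_normal((100, 2))
X_train = np.r_[X_train + 2, X_train - 2]

# New data: normal samples plus two novel points
X_new = 0.3 * rng.standard_normal((20, 2))
X_new = np.r_[X_new + 2, X_new - 2, [[5, 5], [-5, -5]]]

model = IsolationForest(contamination=0.05, random_state=42).fit(X_train)

# -1 for novel, 1 for normal
predictions = model.predict(X_new)
print("Predictions for new samples:", predictions)
```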

One subtle but important distinction is that in novelty detection, you don’t want to include any anomalies in your training dataset, otherwise the model’s boundary of “normal” gets distorted. Data cleaning before training becomes paramount. This contrasts with outlier detection on an entire dataset, which assumes the dataset might be a mix of good and bad points from the start.

Deploying novelty detection systems is not without its challenges. For example, the decision boundary between normal and novel data can be complex and sometimes counterintuitive, necessitating a deep understanding of feature engineering and domain context. Incorporating a dimensionality reduction step such as PCA before novelty detection can sometimes help the model focus on the most relevant aspects of the data’s variation. t-SNE is less suitable for this purpose: scikit-learn’s TSNE has no transform method for unseen samples, so it is better kept for visualizing the results.
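
One way to combine PCA with a novelty detector is a scikit-learn pipeline, so the same scaling and projection learned from the training data are applied to new samples. The sketch below uses synthetic data in which three hypothetical latent factors drive 20 observed features; the `mixing` matrix, the `sample` helper, and all parameter values are assumptions made for the example.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)
mixing = rng.standard_normal((3, 20))  # hypothetical: 3 latent factors, 20 features

def sample(latent):
    """Map latent factors to 20 noisy observed features."""
    latent = np.asarray(latent, dtype=float)
    return latent @ mixing + 0.1 * rng.standard_normal((len(latent), 20))

X_train = sample(rng.standard_normal((300, 3)))  # 'normal' samples only
X_new = sample(np.vstack([rng.standard_normal((5, 3)),   # five normal-looking samples
                          [[8.0, 8.0, 8.0]]]))            # one extreme sample

detector = make_pipeline(
    StandardScaler(),
    PCA(n_components=3),
    OneClassSVM(nu=0.05, gamma="scale"),
)
detector.fit(X_train)

predictions = detector.predict(X_new)  # -1 for novel, 1 for normal
print("Predictions for new samples:", predictions)
```

A caveat worth keeping in mind: PCA keeps the directions of greatest variance in the training data, so a novelty that differs only along a discarded direction can become invisible after projection.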

Lastly, when working with time series or sequential data, novelty detection gets even trickier because anomalies often depend on temporal context. Specialized models, such as LSTM-based autoencoders, come into play there, but the fundamental principle remains the same: train only on normal sequences, detect deviations as novelties.

In essence, novelty detection extends your analytical toolkit beyond what was previously observed, enabling your models to flag and adapt to the unknown. This capability is important for maintaining robustness in ever-changing environments where surprises are inevitable. Moving forward, we’ll look at practical guidelines for selecting the best detection model tailored to your dataset’s characteristics.

Choosing the right scikit-learn model for your use case

Choosing the right scikit-learn model boils down to understanding your dataset’s structure, dimensionality, and the nature of anomalies you’re expecting. If your data is fairly low-dimensional and roughly Gaussian, a simple parametric method like EllipticEnvelope might be enough. It fits a robust covariance estimate, which amounts to drawing an ellipse around the bulk of the data in feature space, and flags points whose Mahalanobis distance from the center exceeds a threshold.

Here’s a brief example using EllipticEnvelope:

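```python
from sklearn.covariance import EllipticEnvelope
import numpy as np

rng = np.random.default_rng(0)

# A covariance estimate needs more than a handful of non-collinear points:
# 100 roughly Gaussian samples around (2.5, 2.5) plus one clear outlier
X = np.vstack([rng.normal(loc=2.5, scale=0.5, size=(100, 2)), [[8, 8]]])

model = EllipticEnvelope(contamination=0.01, random_state=0)
model.fit(X)

predictions = model.predict(X)
print("Predictions (-1 for outliers, 1 for inliers):", predictions)
```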

If your data is more complex, with varied densities or non-convex clusters, then density-based models like DBSCAN or LOF (Local Outlier Factor) can be more suitable. LOF evaluates the local density deviation of a given data point with respect to its neighbors. If the local density is significantly lower, it flags it as an outlier:

```python
from sklearn.neighbors import LocalOutlierFactor
import numpy as np

X = np.array([[1, 2], [2, 2], [2, 1], [8, 8], [2, 2]])

model = LocalOutlierFactor(n_neighbors=2, contamination=0.2)
outlier_flags = model.fit_predict(X)
print("LOF predictions (-1 for outliers, 1 for inliers):", outlier_flags)
```

Note that LocalOutlierFactor in its default mode is not a novelty detector: it scores the points it was fitted on through fit_predict and does not expose predict for unseen data. To detect novelties on new data, either construct it with novelty=True, which enables predict, decision_function, and score_samples on new samples (fit_predict is then unavailable), or use OneClassSVM or IsolationForest.
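
For completeness, here is a minimal sketch of LocalOutlierFactor in novelty mode. Constructing it with `novelty=True` makes `predict` available for samples that were not part of the training set; the synthetic data and `n_neighbors=20` are assumptions for illustration.

```python
import numpy as np
from sklearn.neighbors import LocalOutlierFactor

rng = np.random.default_rng(0)
X_train = 0.3 * rng.standard_normal((100, 2)) + 2  # 'normal' data only
X_new = np.array([[2.0, 2.1], [5.0, 5.0]])         # one typical point, one far away

# novelty=True enables predict / decision_function / score_samples on new data
model = LocalOutlierFactor(n_neighbors=20, novelty=True)
model.fit(X_train)

predictions = model.predict(X_new)  # -1 for novel, 1 for normal
print("LOF novelty predictions:", predictions)
```

In this mode the model should only be asked about new samples; calling `predict` on the training data itself would give misleading results, because each training point would count itself among its own neighbors.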

For high-dimensional data, IsolationForest often performs well. Because it isolates points with random axis-aligned splits instead of computing distances, it tends to suffer less from the curse of dimensionality than distance- or density-based methods. It also scales well to large datasets, a practical consideration as dimensionality and dataset size grow. A typical workflow would look like this:

```python
from sklearn.ensemble import IsolationForest
import numpy as np

X = np.random.randn(1000, 50)  # High-dimensional data

model = IsolationForest(contamination=0.05, random_state=0)
model.fit(X)

# Predict on new data
X_new = np.random.randn(10, 50)
preds = model.predict(X_new)
print("IsolationForest predictions on new data:", preds)
```

Choose IsolationForest if your data is large or high-dimensional and you want scalability with decent outlier detection quality out-of-the-box. Its hyperparameters—especially n_estimators and max_samples—can be tuned for a tradeoff between accuracy and compute expense.

Keep in mind your use case’s tolerance for false positives and false negatives. For example, if the cost of missing an anomaly is high (fraud detection), prefer models or hyperparameters that produce fewer false negatives, even if that means more false positives. Often the contamination parameter is your lever here, specifying the expected proportion of outliers upfront.
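
The same lever can be pulled after fitting by thresholding the raw anomaly scores yourself. The sketch below uses IsolationForest's `score_samples` (lower means more anomalous) and compares a strict and a lenient cutoff; the 1% and 10% levels and the synthetic data are assumptions for illustration.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(0)
X = rng.standard_normal((1000, 5))

model = IsolationForest(random_state=0).fit(X)
scores = model.score_samples(X)  # lower scores mean more anomalous

# Flag the lowest-scoring 1% versus the lowest-scoring 10% of points.
strict_cutoff, lenient_cutoff = np.percentile(scores, [1, 10])
flagged_strict = scores < strict_cutoff
flagged_lenient = scores < lenient_cutoff

print("Flagged at the 1% cutoff:", int(flagged_strict.sum()))
print("Flagged at the 10% cutoff:", int(flagged_lenient.sum()))
```

The lenient cutoff catches everything the strict one does and more, trading extra false positives for fewer missed anomalies, which is the direction to lean when a missed anomaly is expensive.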

Also, examine whether your dataset contains labeled anomalies or is entirely unlabeled. The detectors discussed here are unsupervised, but even a handful of labeled anomalies is useful: you can use them to choose the decision threshold on a model’s anomaly scores (for example, the output of IsolationForest’s score_samples) or to compare candidate models. With enough labels, a supervised classifier trained on both classes may discriminate anomalies better.
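
When a few labels are available, one possible use is to pick the score threshold from a precision-recall curve. This is a sketch under stated assumptions: the data and labels are synthetic, the 95% recall target is arbitrary, and for brevity the threshold is chosen on the same data the model was fitted on, whereas in practice you would use a held-out labeled set.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.metrics import precision_recall_curve

rng = np.random.default_rng(0)
X_normal = rng.standard_normal((500, 2))
X_anomalous = rng.uniform(low=5, high=8, size=(20, 2))  # synthetic labeled anomalies
X = np.vstack([X_normal, X_anomalous])
y = np.r_[np.zeros(500, dtype=int), np.ones(20, dtype=int)]  # 1 = anomaly

model = IsolationForest(random_state=0).fit(X)
anomaly_score = -model.score_samples(X)  # negate so higher means more anomalous

precision, recall, thresholds = precision_recall_curve(y, anomaly_score)

# Highest threshold that still reaches at least 95% recall (an assumed target).
meets_target = recall[:-1] >= 0.95
chosen = thresholds[meets_target][-1]
predicted = anomaly_score >= chosen

print("Chosen threshold:", round(float(chosen), 3))
print("Recall on labeled anomalies:", float(predicted[y == 1].mean()))
print("Points flagged in total:", int(predicted.sum()))
```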

Finally, consider model interpretability. Models like LocalOutlierFactor or simple statistical methods are easier to explain to stakeholders compared to kernel-based OneClassSVM or ensemble models. If you need that transparency, lean towards simpler models or augment complex ones with explainability tools like SHAP or LIME.