
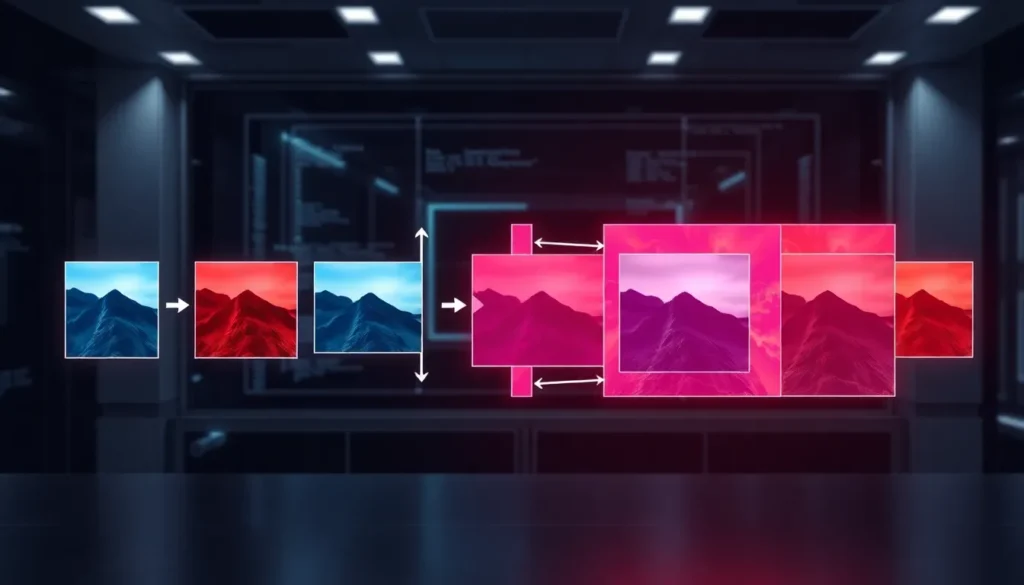

Advanced PyTorch Techniques for Image and Video Processing

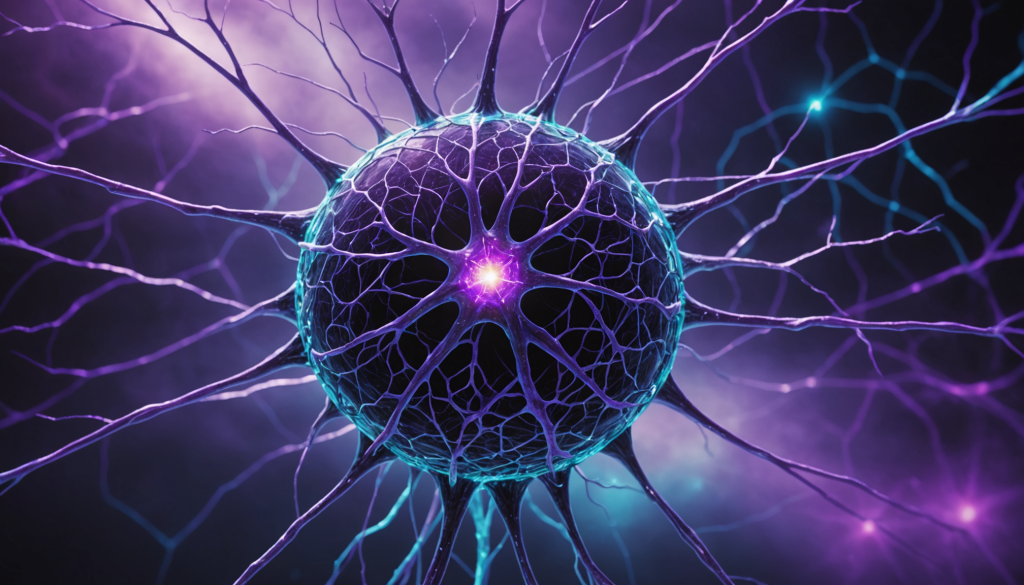

Video analysis exploits temporal dependencies between frames using Recurrent Neural Networks (RNNs), most commonly Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks. Their gating mechanisms mitigate the vanishing-gradient problem that affects plain RNNs. In video classification, a CNN typically extracts spatial features from each frame, and the recurrent layer models how those features evolve over time, capturing the long-term dependencies that video understanding requires.
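To make the CNN-plus-RNN idea concrete, here is a minimal sketch of a video classifier that runs a small CNN on every frame and feeds the resulting feature sequence to an LSTM. The class name `CNNLSTMClassifier`, the layer sizes, the clip shape, and the number of classes are all illustrative assumptions, not values from the article; a real pipeline would more likely use a pretrained backbone for the per-frame features.

```python
import torch
import torch.nn as nn


class CNNLSTMClassifier(nn.Module):
    """Hypothetical example: per-frame CNN features -> LSTM -> class logits."""

    def __init__(self, num_classes=5, feature_dim=64, hidden_dim=128):
        super().__init__()
        # Small CNN that maps one RGB frame to a feature vector (sizes are assumptions).
        self.cnn = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(16, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),  # -> (N, 32, 1, 1)
            nn.Flatten(),             # -> (N, 32)
            nn.Linear(32, feature_dim),
        )
        # The LSTM consumes the sequence of frame features.
        self.lstm = nn.LSTM(feature_dim, hidden_dim, batch_first=True)
        self.head = nn.Linear(hidden_dim, num_classes)

    def forward(self, clips):
        # clips: (batch, time, channels, height, width)
        b, t, c, h, w = clips.shape
        # Fold time into the batch dimension so the CNN sees individual frames.
        feats = self.cnn(clips.reshape(b * t, c, h, w))
        # Restore the time dimension: (batch, time, feature_dim).
        feats = feats.reshape(b, t, -1)
        # h_n has shape (num_layers, batch, hidden_dim); take the last layer.
        _, (h_n, _) = self.lstm(feats)
        return self.head(h_n[-1])


model = CNNLSTMClassifier()
clips = torch.randn(2, 8, 3, 32, 32)  # 2 random clips of 8 frames, 32x32 RGB
logits = model(clips)
print(logits.shape)  # torch.Size([2, 5])
```

Classifying from the final hidden state is only one design choice; averaging the LSTM outputs over all time steps is a common alternative. To try a GRU instead, replace `nn.LSTM` with `nn.GRU` and adjust the unpacking, since `nn.GRU` returns `(output, h_n)` with no cell state.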